Unveiling the Importance of Features in Data Science

Introduction

In this article, we will look at a critical concept in Data Science: “Feature Importance.” It is aimed at beginners who are just starting out in modeling and want to dig deeper into the results of their models.

What is Feature Importance?

Imagine a scenario where you have numerous variables, but not all are equally important for the outcome of your model. This is where “Feature Importance” comes in. The term covers a family of techniques for evaluating and ranking the variables in a dataset according to how much they influence the predictions of a model for the target variable. Understanding “Feature Importance” helps to simplify models, make them more efficient, and, most importantly, understand the data better.

Common Techniques of Feature Importance

Coefficient Analysis in Linear Models

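In linear models, such as linear and logistic regression, the coefficients assigned to the variables can be interpreted as measures of importance. A coefficient with a large absolute value implies a strong influence on the prediction, provided the features are measured on comparable scales. For example, with logistic regression: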

from sklearn.linear_model import LogisticRegression

from sklearn.datasets import load_iris

import matplotlib.pyplot as plt

import pandas as pd

# Loading the Iris dataset

iris = load_iris()

X = pd.DataFrame(iris.data, columns=iris.feature_names)

y = iris.target

# Fitting the logistic regression model

model = LogisticRegression(max_iter=200)

model.fit(X, y)

# Visualizing the coefficients; coef_ has one row per class, and row 0 belongs to the first class (setosa)

coefficients = pd.Series(model.coef_[0], index=X.columns)

coefficients.plot(kind='barh')

plt.title('Feature Importance in Logistic Regression Model')

plt.xlabel('Coefficient')

plt.ylabel('Feature')

plt.show()

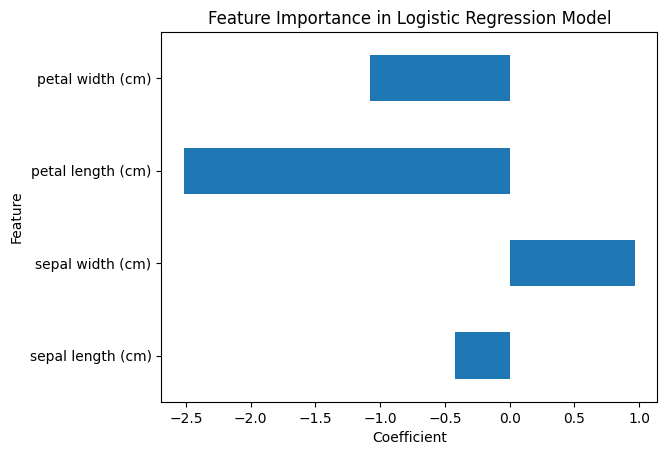

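In the logistic regression example using the Iris dataset, we observe the importance of each feature through the model’s coefficients. Logistic regression, being a linear model, assigns a weight or coefficient to each feature, which can be interpreted as its relative importance. Because Iris has three classes, the model learns one set of coefficients per class; the code plots model.coef_[0], the coefficients for the first class (setosa).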

In the generated graph, we can clearly see which features carry more weight. For example, if “petal width” has the coefficient with the largest absolute value, this indicates that it has the strongest influence on separating that class from the others. This makes sense, as in the biology of irises, petal width and length are distinctive features among species.
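Coefficients are only comparable when the features share a scale, and a multiclass model has one coefficient per feature and class. The sketch below is one possible way to handle both points, not the only one: it standardizes the features with StandardScaler and then averages the absolute coefficients across the three classes. The names per_class and overall, and the choice of the mean absolute value as a summary, are assumptions made for illustration.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

iris = load_iris()
X = pd.DataFrame(iris.data, columns=iris.feature_names)
y = iris.target

# Put every feature on the same scale (mean 0, standard deviation 1)
X_scaled = StandardScaler().fit_transform(X)

model = LogisticRegression(max_iter=1000)
model.fit(X_scaled, y)

# coef_ has one row per class and one column per feature
per_class = pd.DataFrame(model.coef_, index=iris.target_names, columns=X.columns)

# Illustrative summary (an assumption): mean absolute coefficient across classes
overall = per_class.abs().mean(axis=0).sort_values(ascending=False)

print(per_class.round(2))
print(overall.round(2))
```

The per_class table keeps the sign of each coefficient for each class, while overall gives a single ranking that ignores the sign.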

It’s important to note that a large coefficient can represent either a positive or a negative influence, depending on its sign. A positive coefficient means that higher values of the feature raise the model’s score for the class in question, making that class more likely, while a negative coefficient means that higher values lower it. The absolute value indicates how strong the effect is.
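To make the role of the sign concrete, here is a minimal sketch, assuming the same Iris setup as above. It checks that the per-class scores returned by decision_function are a linear combination of the features, and that raising one feature by one unit shifts each class score by exactly that feature’s coefficient for the class. The variable names (manual_scores, bumped, score_change) are illustrative.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

iris = load_iris()
X, y = iris.data, iris.target

model = LogisticRegression(max_iter=1000).fit(X, y)

# Each class score is a weighted sum of the features plus an intercept
manual_scores = X @ model.coef_.T + model.intercept_
matches = np.allclose(manual_scores, model.decision_function(X))
print("Scores are linear in the features:", matches)

# Raise a single feature by one unit for the first sample
feature_idx = 3  # column 3 is "petal width (cm)"
sample = X[:1].copy()
bumped = sample.copy()
bumped[0, feature_idx] += 1.0

# The change in each class score equals that class's coefficient
score_change = model.decision_function(bumped) - model.decision_function(sample)
print("Change in class scores:", score_change[0].round(3))
print("Coefficients for the feature:", model.coef_[:, feature_idx].round(3))
```

Classes with a positive coefficient for the feature see their score go up, and classes with a negative coefficient see it go down.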

This analysis helps us understand not just which features are important, but also how they influence the model’s prediction.

Feature Importance in Decision Trees

Decision trees and tree-based models, like random forests, offer direct insight into the importance of features. These models provide a score for each feature, indicating its usefulness in constructing the trees. For example:

from sklearn.ensemble import RandomForestClassifier

from sklearn.datasets import load_iris

import pandas as pd

import matplotlib.pyplot as plt

# Loading the Iris dataset

iris = load_iris()

X = pd.DataFrame(iris.data, columns=iris.feature_names)

y = iris.target

# Creating and training the random forest model

model = RandomForestClassifier(n_estimators=100, random_state=42)  # fixed seed so the importances are reproducible

model.fit(X, y)

# Visualizing the importance of features

importances = pd.Series(model.feature_importances_, index=X.columns)

importances.nlargest(4).plot(kind='barh')

plt.title('Feature Importance in Random Forest')

plt.show()

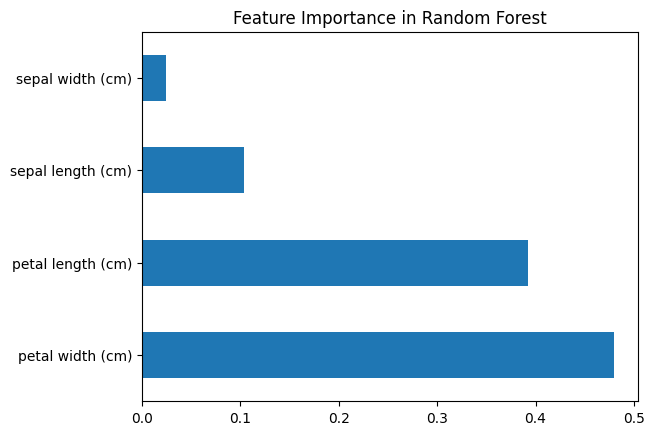

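In the example using the Random Forest model, the importance of features is determined based on how useful they are in constructing the decision trees. Unlike logistic regression, this technique is not based on linear coefficients but on how much the splits made on each feature reduce impurity (for example, Gini impurity), averaged over all the trees in the forest. The scores are non-negative and sum to 1, so they show how strongly a feature is used but not the direction of its effect.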

The chart shows that certain features, like “petal length” and “petal width,” are more significant for the model. These features likely provide the most informative splits and help the model effectively differentiate between the classes.
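Since a random forest is a collection of trees, it can help to look at how much the importance of a feature varies from tree to tree. The following is a minimal sketch, assuming the same Iris setup; it reads feature_importances_ from each fitted tree in estimators_ and reports the mean and standard deviation. The random_state value and the names per_tree and summary are illustrative choices.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
X = pd.DataFrame(iris.data, columns=iris.feature_names)
y = iris.target

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# One row per tree, one column per feature
per_tree = np.array([tree.feature_importances_ for tree in model.estimators_])

summary = pd.DataFrame(
    {"mean": per_tree.mean(axis=0), "std": per_tree.std(axis=0)},
    index=X.columns,
)
print(summary.sort_values("mean", ascending=False).round(3))

# The forest-level importances add up to 1
total = model.feature_importances_.sum()
print("Sum of importances:", round(total, 3))
```

A large standard deviation relative to the mean suggests that the ranking of that feature depends a lot on which trees happened to be grown, so it is worth reading the bar chart with some caution.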

This analysis is crucial for understanding how the model makes its decisions and which data characteristics are most strongly associated with the different iris categories. It can also guide future data collection and feature preparation, prioritizing those that are most informative for the model.
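As a rough illustration of using importances to prioritize features, the sketch below keeps only the two highest-ranked features and compares test accuracy with the full model. The 70/30 split, the choice of two features, and the random_state values are assumptions made for this example; results on a dataset as small as Iris will vary with the split.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

iris = load_iris()
X = pd.DataFrame(iris.data, columns=iris.feature_names)
y = iris.target

# Hold out a test set so both models are judged on unseen data
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Rank the features using the training data only
full = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_train, y_train)
importances = pd.Series(full.feature_importances_, index=X.columns)
top_features = importances.nlargest(2).index.tolist()

# Retrain on the two most important features
reduced = RandomForestClassifier(n_estimators=100, random_state=0)
reduced.fit(X_train[top_features], y_train)

full_acc = full.score(X_test, y_test)
reduced_acc = reduced.score(X_test[top_features], y_test)
print("Top features:", top_features)
print("Accuracy with all features:", round(full_acc, 3))
print("Accuracy with top features:", round(reduced_acc, 3))
```

If the reduced model performs about as well as the full one, the dropped features were adding little for this model, which is the kind of simplification the ranking is meant to support.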

Final Considerations

“Feature Importance” is a valuable tool in any data scientist’s arsenal. It can guide feature selection, which may lead to simpler and more efficient models, and it provides deeper insights into the data. We recommend that readers apply these techniques in their own projects for a more practical and in-depth understanding.